Facebook Shuts Down Its AI System

A lot of people are seizing on the news that Facebook shut down its AI system after two of its bots began creating their own language as a shortcut for communicating with each other. Some are hailing Elon Musk as an “I told you so” prophet. But in all honesty, the Facebook AI was merely doing the very same thing that humans do: evolving language to speed up communication. Just look at the evolution of language in text messaging: LOL (Laughing Out Loud), BTW (By the Way), OMG (Oh My God), 4YEO (For Your Eyes Only), IDK (I Don’t Know), IMO (In My Opinion), NOYB (None of Your Business), OT (Off Topic), OTOH (On The Other Hand), POV (Point of View), TIA (Thanks in Advance), TGIF (Thank God it’s Friday), TQ (Thank You), TYT (Take Your Time), TTYL (Talk To You Later), WFM (Works for Me), and the venerable WTF. The basic set of substitutions I find myself using has now become a classic:

| 2 | 4 | B | C | I | O | R | U | Y |
|---|---|---|---|---|---|---|---|---|
| to / too | for | be | see | eye | owe | are | you | why |

Facebook wanted its AI to communicate with people. When the AI evolves its own language, as humans do, its potential as an interface to people is lost. Facebook’s researchers shut the system down because its AI was no longer using English. This is actually a common problem: an AI will drift away from the English it was trained on and develop its own language that looks like gibberish to humans but is simply a new shortcut, not unlike text messages today. LOL.

The first important step in comprehending language is recognizing that there are two distinct paths along which language can develop: speaking a language is different from writing it. When I was first doing business in Japan, I hired a tutor to come to the office to teach me Japanese. He insisted that I learn to read and write. At first the task seemed too daunting, and I said I just wanted to learn to speak, not to read and write. I was reluctant and felt I did not have the time to devote to it. Nonetheless, he insisted, so I gave it a try. What I discovered was that I was doing the same thing my computer was doing: pattern recognition.

First, we have the alphabetic system, in which symbols represent sounds. It originated with the Phoenician alphabet, which developed from the Proto-Canaanite alphabet during the 15th century BC. Before then, the Phoenicians wrote with a cuneiform script. Cuneiform writing was gradually replaced by the Phoenician alphabet during the Neo-Assyrian Empire (911–612 BC), and by the second century AD cuneiform had become extinct. The earliest known inscriptions in the Phoenician alphabet come from Byblos and date back to 1000 BC. The Phoenician alphabet was perhaps the first alphabetic script to be widely used, because the Phoenicians traded around the Mediterranean, establishing cities and colonies in parts of southern Europe and North Africa. Then we have pictorial languages. Egyptian hieroglyphs were a formal writing system that combined logographic, syllabic and alphabetic elements, with a total of some 1,000 distinct characters.

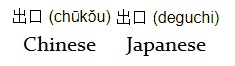

The divide between Western and Asian written languages can be seen in the split between the Phoenician and Egyptian systems: the farther east one traveled, the more pictorial the written language became. If we consult the authoritative classic Kāngxī dictionary, we find that it lists over 47,000 characters. The Hanyu Da Zidian, a more modern reference, has over 54,000 characters, while the Dai Kan-Wa Jiten (the Japanese equivalent) has over 50,000. Then there is the Zhōnghuá Zìhǎi, which has over 85,000 characters. However, many of those listed are really just variants. From a practical viewpoint, Japan has only 2,136 Jōyō kanji that are commonly used and taught in school, although well-educated readers generally know more. The Chinese equivalent is the Xiàndài Hànyǔ Chángyòng Zìbiǎo, which lists about 3,500 characters. The image above shows the word for exit, 出口, which is written the same way in Chinese and Japanese but spoken entirely differently: chūkǒu in Mandarin and deguchi in Japanese. Hence my point about the distinction between written and spoken language, which is critical to understand in computer programming.

When you create a real AI and allow it to communicate, you have to expect that it will be more efficient and speak in concepts rather than words. Most people do not comprehend that we humans also really think in CONCEPTS. When you speak more than one language and THINK in another language, you are really going down the road of thinking in CONCEPTS. The Japanese phrase “Onegai shimasu” is very hard to translate directly into English. The second part, “shimasu,” is actually the polite present-tense form of the verb “suru,” which means “to do.” “Onegai” comes from the verb “negau,” which actually means “to pray to” or “to wish for” something. You can ask for a cup of coffee with “Kōhī onegai shimasu,” or ask to use someone’s phone with “Denwa onegai shimasu,” so my English rendering is simply an action verb that can apply to anything. There is no direct translation into English; it remains a CONCEPT I comprehend in my mind that does not attach to any English word. Hence, when we break down our own thoughts, we find we are not thinking in words; a word is simply the symbol for a CONCEPT.

This is actually what we do when we create a language to program a computer. Today, few programmers are even capable of writing in machine code. We have created higher-level languages such as BASIC, C++ and COBOL that a compiler (or, for some languages, an interpreter) translates into machine code for the computer to run. The classic IF THEN ELSE statement, written here in C-style syntax, is translated into machine language, shown as human-readable assembly mnemonics, like so:

```c
if (x >= y) {
    // main branch
} else {
    // else branch
}
```

Assembly Language Translation

```asm
cmp (r1),(r2)   ; compare the words at the memory addresses pointed to by registers r1 and r2
blt else        ; jump to else if (r1) is less than (r2)
…               ; insert the main branch code here
bra end_if      ; jump to end_if
else:
…               ; insert the else branch code here
end_if:
```

Therefore, programmers do not write code that the computer directly understands. It should not be surprising, then, that we need to create an interface for the computer to translate its complex conceptual thinking back into English we can comprehend. Google’s AI has created its own internal conceptual language, a made-up “universal language” it uses as an intermediary, which allows it to translate efficiently between pairs of human languages it has never explicitly been taught. I do the same in my mind, visualizing the CONCEPTS that words represent. Think of the word BEER and you will most likely visualize a beer you might drink; do the same with SCOTCH, COFFEE or whatever you like. The word merely represents a CONCEPT.

Computers are excellent problem solvers that can perform calculations at rates far in excess of the human brain. However, humans retain the upper hand in creativity and imagination. We can reason with ourselves, develop plans and think of abstract concepts that can’t be defined. We can even solve problems while dreaming. Nevertheless, I found it possible to create introspection abilities whereby the program simulates reasoning by questioning its own results, perhaps with less bias than humans do. This ability to test itself gave the program an objective way to learn strategies and thus make plans without actually comprehending, in a cognitive sense, what the future truly is. The result was the ability to generate “what if” scenarios that cannot necessarily be resolved by logic alone.

Therefore, the fact that an AI computer creates a more efficient language in which to communicate should not be a surprise. Once you understand that we actually think in CONCEPTS and that words are merely an expression of a CONCEPT, understanding AI development opens a whole new door to exploring humanity itself. Elon Musk is not actually a programmer. He is a businessman who deals in future concepts. OpenAI, which he co-founded, assumes an awful lot: that AI can surpass humanity in general intelligence and become “superintelligent,” the premise on which most sci-fi thrillers are based. Such a superintelligence, it is assumed, could become powerful and difficult to control. The entire argument rests on the idea that “consciousness” somehow emerges once a certain level of intelligence is reached, so that an evil machine will simply evolve all on its own. I seriously doubt this threat, for I do not know how it would be possible to program even a self-evolving consciousness. I can mimic emotion but not truly create it. The majority of AI researchers do not even comprehend how the brain works, nor do they truly understand that the human “machine language” is conceptual thinking.

A dog can love you, be loyal and learn tricks. But a dog really lives in the present, without a CONCEPT of the future. A dog does not plan what it will do next week or manage its time. Likewise, we can create a computer that remembers the past, as a dog does, and projects into the future without actually conceptualizing what the future truly means, just as a dog does not. A computer cannot plot or manage its time. It has no sense of time, nor does it face the concept of mortality.

Creating an AI computer that reasons in concepts is no big deal. A dog understands concepts, and we communicate in concepts. A dog hears a word, such as its name, and knows conceptually what it means: it attaches that sound to a concept within its brain. Dogs do not read or write; they listen. So Facebook’s shutting down its AI computer was not an effort to save the world from the kind of threat Elon Musk seems to fear. I suggest getting a dog and paying attention.